Podcast

Conference on the Political Economy of AI Podcast Episodes

Check out the podcast episodes from the Allen Lab for Democracy Renovation’s Conference on the Political Economy of AI to glean insights from each panel.

Commentary

Will AI-assisted polls soon replace more traditional techniques?

Public polling is a critical function of modern political campaigns and movements, but it isn’t what it once was. Recent US election cycles have produced copious postmortems explaining both the successes and the flaws of public polling. There are two main reasons polling fails.

First, nonresponse has skyrocketed. It’s radically harder to reach people than it used to be. Few people fill out surveys that come in the mail anymore. Few people answer their phone when a stranger calls. Pew Research reported that 36% of the people it called in 1997 would talk to its interviewers, but only 6% would by 2018. Pollsters worldwide have faced similar challenges.

Second, people don’t always tell pollsters what they really think. Some hide their true thoughts because they are embarrassed about them. Others behave as partisans, telling the pollster what they think their party wants them to say – or what they know the other party doesn’t want to hear.

Despite these frailties, obsessive interest in polling nonetheless consumes our politics. Headlines are more likely to tout the latest changes in polling numbers than the policy issues at stake in the campaign. This is a tragedy for a democracy. We should treat elections like choices that have consequences for our lives and well-being, not contests to decide who gets which cushy job.

AI could change polling. AI can offer the ability to instantaneously survey and summarize the expressed opinions of individuals and groups across the web, understand trends by demographic, and offer extrapolations to new circumstances and policy issues on par with human experts. The politicians of the (near) future won’t anxiously pester their pollsters for information about the results of a survey fielded last week: they’ll just ask a chatbot what people think. This will supercharge our access to real-time, granular information about public opinion, but at the same time it might also exacerbate concerns about the quality of this information.

We know it sounds impossible, but stick with us.

Large language models, the AI foundations behind tools like ChatGPT, are built on top of huge corpora of data culled from the Internet. These are models trained to recapitulate what millions of real people have written in response to endless topics, contexts, and scenarios. For a decade or more, campaigns have trawled social media, looking for hints and glimmers of how people are reacting to the latest political news. This makes asking questions of an AI chatbot similar in spirit to doing analytics on social media, except that chatbots are generative: you can ask them new questions that no one has ever posted about before, you can generate more data from populations too small to measure robustly, and you can immediately ask clarifying questions of your simulated constituents to better understand their reasoning.

Researchers and firms are already using LLMs to simulate polling results. Current techniques are based on the idea of AI agents. An AI agent is an instance of an AI model that has been conditioned to behave in a certain way. For example, it may be primed to respond as if it is a person with certain demographic characteristics and can access news articles from certain outlets. Researchers have set up populations of thousands of AI agents that respond as if they are individual members of a survey population, like humans on a panel who get called periodically to answer questions.
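As a rough illustration of what "conditioning" an agent can mean, the sketch below builds one text prompt per simulated respondent. Everything in it is an assumption made for illustration: the `Persona` fields, the attribute values, and the prompt wording are hypothetical, and no particular research group's setup or model API is implied.

```python
from dataclasses import dataclass
from itertools import product


@dataclass(frozen=True)
class Persona:
    """Hypothetical demographic profile used to condition one agent."""
    age_group: str
    gender: str
    ideology: str
    news_source: str


def build_prompt(persona: Persona, question: str) -> str:
    """Compose the conditioning text that would be sent to a language model."""
    return (
        "Answer the survey question as the respondent described below.\n"
        f"Age group: {persona.age_group}\n"
        f"Gender: {persona.gender}\n"
        f"Political ideology: {persona.ideology}\n"
        f"Main news source: {persona.news_source}\n"
        f"Question: {question}"
    )


def build_panel() -> list[Persona]:
    """Enumerate every combination of a few illustrative attributes."""
    ages = ["18-29", "30-64", "65+"]
    genders = ["man", "woman"]
    ideologies = ["liberal", "moderate", "conservative"]
    sources = ["national newspapers", "cable news"]
    return [Persona(*combo) for combo in product(ages, genders, ideologies, sources)]


panel = build_panel()
prompts = [build_prompt(p, "Do you approve of the US Supreme Court?") for p in panel]
print(len(prompts))
print(prompts[0])
```

This toy panel gives every combination of attributes equal weight. A panel meant to resemble a real electorate would presumably draw personas in proportion to their share of the population instead.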

The big difference between humans and AI agents is that the AI agents always pick up the phone, so to speak, no matter how many times you contact them. A political candidate or strategist can ask an AI agent whether voters will support them if they take position A versus B, or tweaks of those options, like policy A-1 versus A-2. They can ask that question of male voters versus female voters. They can further limit the query to married male voters of retirement age in rural districts of Illinois without college degrees who lost a job during the last recession; the AI will integrate as much context as you ask.

What’s so powerful about this system is that it can generalize to new scenarios and survey topics, and spit out a plausible answer, even if its accuracy is not guaranteed. In many cases, it will anticipate those responses at least as well as a human political expert. And if the results don’t make sense, the human can immediately prompt the AI with a dozen follow-up questions.

When we ran our own experiments in this kind of AI use case with the earliest versions of the model behind ChatGPT (GPT-3.5), we found that it did a fairly good job of replicating human survey responses. The ChatGPT agents tended to match the responses of their human counterparts across a variety of survey questions, such as support for abortion and approval of the US Supreme Court. The AI polling results had average responses, and distributions across demographic properties such as age and gender, similar to real human survey panels.

Our major systemic failure happened on a question about US intervention in the Ukraine war. In our experiments, the AI agents conditioned to be liberal were predominantly opposed to US intervention in Ukraine and likened it to the Iraq war. Conservative AI agents gave hawkish responses supportive of US intervention. This is pretty much what most political experts would have expected of the political equilibrium in US foreign policy at the start of the decade, but it was exactly wrong for the politics of today.

This mistake has everything to do with timing. The humans were asked the question after Russia’s full-scale invasion in 2022, whereas the AI model was trained using data that only covered events through September 2021. The AI got it wrong because it didn’t know how the politics had changed. The model lacked sufficient context on crucially relevant recent events.

We believe AI agents can overcome these shortcomings. While AI models are dependent on the data they are trained with, and all the limitations inherent in that, what makes AI agents special is that they can automatically source and incorporate new data at the time they are asked a question. AI models can update the context in which they generate opinions by learning from the same sources that humans do. Each AI agent in a simulated panel can be exposed to the same social and media news sources as humans from that same demographic before they respond to a survey question. This works because AI agents can follow multi-step processes, such as reading a question, querying a defined database of information (such as Google, or the New York Times, or Fox News, or Reddit), and then answering a question.
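The multi-step process described above (read the question, look up recent context, then answer) can be sketched in a few lines. This is a minimal sketch under stated assumptions: `NEWS_ARCHIVE`, `retrieve`, and `placeholder_model` are hypothetical stand-ins, the keyword matching is deliberately naive, and a real system would call an actual search index and language model in their place.

```python
from typing import Callable

# Hypothetical stand-in for a searchable news archive, keyed by outlet name.
NEWS_ARCHIVE: dict[str, list[str]] = {
    "outlet_a": [
        "Headline about the invasion of Ukraine",
        "Headline about local housing costs",
    ],
    "outlet_b": [
        "Headline about Ukraine aid debate",
        "Headline about sports",
    ],
}


def retrieve(outlet: str, question: str, limit: int = 3) -> list[str]:
    """Return headlines from one outlet that share a keyword with the question."""
    keywords = {w.strip("?.,").lower() for w in question.split() if len(w) > 3}
    hits = [
        headline
        for headline in NEWS_ARCHIVE.get(outlet, [])
        if keywords & {w.lower() for w in headline.split()}
    ]
    return hits[:limit]


def answer_with_context(
    persona: str, outlet: str, question: str, model: Callable[[str], str]
) -> str:
    """Read the question, fetch recent context, then ask the model to answer."""
    context = retrieve(outlet, question)
    prompt = (
        f"Respondent profile: {persona}\n"
        "Recent headlines this respondent may have seen:\n"
        + "\n".join(f"* {headline}" for headline in context)
        + f"\nSurvey question: {question}\nAnswer:"
    )
    return model(prompt)


def placeholder_model(prompt: str) -> str:
    """Stand-in for a real language model call; it simply returns the prompt."""
    return prompt


print(
    answer_with_context(
        "liberal, age 30-44",
        "outlet_a",
        "Should the US intervene in Ukraine?",
        placeholder_model,
    )
)
```

Because the retrieval step runs at question time, the context an agent sees can postdate the model's training data, which is the gap that tripped up the Ukraine question.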

In this way, AI polling tools can simulate exposing their synthetic survey panel to whatever news is most relevant to a topic and likely to emerge in each AI agent’s own echo chamber. And they can query for other relevant contextual information, such as demographic trends and historical data. Like human pollsters, they can try to refine their expectations on the basis of factors like how expensive homes are in a respondent’s neighborhood, or how many people in that district turned out to vote last cycle.

“Today, humans fill out the surveys and computers fill in the gaps. In the future, it will be the opposite.”

Aaron Berger, Eric Gong, Nathan Sanders, & Bruce Schneier

AI polling will be irresistible to campaigns, and to the media. But research is already revealing when and where this tool will fail. While AI polling will always have limitations in accuracy, that makes it similar to, not different from, traditional polling. Today’s pollsters are challenged to reach sample sizes large enough to measure statistically significant differences between similar populations, and the issues of nonresponse and inauthentic response can make them systematically wrong. Yet for all those shortcomings, both traditional and AI-based polls will still be useful. For all the hand-wringing and consternation over the accuracy of US political polling, national issue surveys still tend to be accurate to within a few percentage points. If you’re running for a town council seat or in a neck-and-neck national election, or just trying to make the right policy decision within a local government, you might care a lot about those small and localized differences. But if you’re looking to track directional changes over time, or differences between demographic groups, or to uncover insights about who responds best to what message, then these imperfect signals are sufficient to help campaigns and policymakers.
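To make the sample-size point concrete, here is a minimal sketch of the textbook margin-of-error calculation for a proportion, along with a simple check of whether two groups differ by more than sampling noise. It assumes simple random sampling and a normal approximation. Real polls apply weighting and design adjustments that this ignores, and the function names are our own.

```python
import math

Z_95 = 1.96  # normal critical value for a 95% confidence level


def margin_of_error(p: float, n: int, z: float = Z_95) -> float:
    """Half-width of the confidence interval for a proportion p from n responses."""
    return z * math.sqrt(p * (1 - p) / n)


def difference_is_significant(
    p1: float, n1: int, p2: float, n2: int, z: float = Z_95
) -> bool:
    """True if two independent sample proportions differ by more than z standard errors."""
    standard_error = math.sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    return abs(p1 - p2) > z * standard_error


print(round(margin_of_error(0.5, 1000), 3))
print(difference_is_significant(0.52, 1000, 0.48, 1000))
print(difference_is_significant(0.60, 200, 0.40, 200))
```

With 1,000 respondents and an even split, the margin of error comes to about 0.031, or roughly three percentage points. Under these assumptions, a 52% versus 48% gap between two groups of 1,000 does not clear the 95% threshold, while a 60% versus 40% gap between two groups of 200 does.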

Where AI will work best is as an augmentation of more traditional human polls. Over time, AI tools will get better at anticipating human responses, and also at knowing when they will be most wrong or uncertain. They will recognize which issues and human communities are in the most flux, where the model’s training data is liable to steer it in the wrong direction. In those cases, AI models can send up a white flag and indicate that they need to engage human respondents to calibrate to real people’s perspectives. The AI agents can even be programmed to automate this. They can use existing survey tools—with all their limitations and latency—to query for authentic human responses when they need them.
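One hedged way to picture the "white flag" is a rule that sends a question to human respondents whenever repeated simulated answers from the same agent are unstable. The sketch below uses normalized Shannon entropy as the instability measure. Both the measure and the 0.9 threshold are illustrative assumptions, not a method described in the research, and `needs_human_sample` is a hypothetical name.

```python
import math
from collections import Counter


def normalized_entropy(answers: list[str]) -> float:
    """Shannon entropy of the answer distribution, scaled to the range [0, 1]."""
    counts = Counter(answers)
    if len(counts) < 2:
        return 0.0  # zero or one distinct answer: no measurable disagreement
    total = len(answers)
    entropy = -sum((c / total) * math.log(c / total) for c in counts.values())
    return entropy / math.log(len(counts))


def needs_human_sample(answers: list[str], threshold: float = 0.9) -> bool:
    """Flag a question for a real survey when simulated answers are too unstable."""
    return normalized_entropy(answers) >= threshold


stable = ["support"] * 9 + ["oppose"]
unstable = ["support"] * 5 + ["oppose"] * 5
print(needs_human_sample(stable))
print(needs_human_sample(unstable))
```

A split in simulated answers can also reflect a genuinely divided public, so a flag like this is best read as a prompt to check against real respondents, not as proof that the model is wrong.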

This kind of human–AI polling chimera lands us, funnily enough, not too distant from where survey research is today. Decades of social science research have led to substantial innovations in statistical methodologies for analyzing survey data. Current polling methods already rely on substantial modeling to project the properties of a general population from sparse survey samples. Today, humans fill out the surveys and computers fill in the gaps. In the future, it will be the opposite: AI will fill out the survey and, when the AI isn’t sure what box to check, humans will fill the gaps. So if you’re not comfortable with the idea that political leaders will turn to a machine to get intelligence about which candidates and policies you want, then you should have about as many misgivings about the present as you will the future.

And while the AI results could improve quickly, they probably won’t be seen as credible for some time. Directly asking people what they think feels more reliable than asking a computer what people think. We expect these AI-assisted polls will be initially used internally by campaigns, with news organizations relying on more traditional techniques. It will take a major election where AI is right and humans are wrong to change that.

Feature

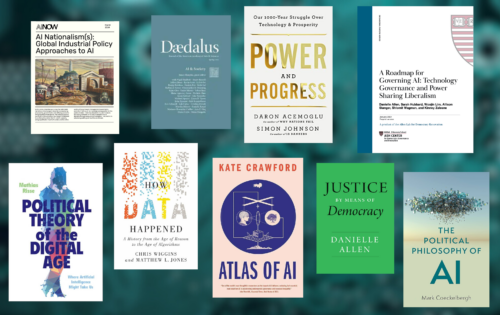

This list, curated by the GETTING-Plurality Research Network at the Allen Lab for Democracy Renovation, highlights a mix of foundational texts and new thinking on the timely issue of how AI will impact democracy, especially as we head into election season.

Feature

Experts gathered at the Allen Lab conference to examine the incentives and structures of AI development, as well as to discuss the past, present, and potential future of steering AI towards better serving the public interest.