Podcast

Preparing for the Election Meltdown … or Not

Co-hosts Archon Fung and Stephen Richer weigh conflicting predictions for the 2026 midterms and explore how to safeguard a free and fair election.

Q+A

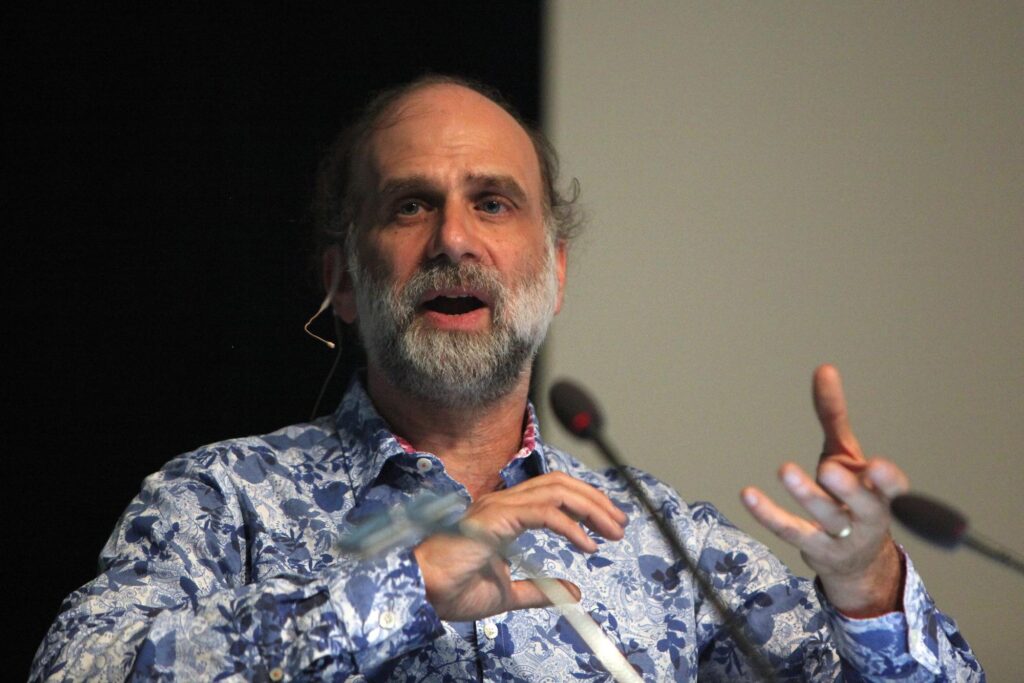

In a new book, Bruce Schneier details how tricks, exploitations, and loopholes are benefiting those in power — and how a ‘hacking’ mindset can help us set things right.

From tax codes to the NFL rulebook, the world is made up of procedures, systems, and settings — all of which can be hacked.

In his newest book, “A Hacker’s Mind: How the Rich and Powerful Bend Society’s Rules, and How to Bend Them Back,” cybersecurity expert and HKS faculty affiliate Bruce Schneier asks readers to expand their definition of hacking beyond computer and IT systems and to consider how nearly everything around us can be hacked, for better or worse. With chapters covering everything from airline frequent flier miles to elections and redistricting, Schneier pushes us to examine how people use and abuse system vulnerabilities to get ahead, and how, by adopting a hacking mindset, we can find and fix these weaknesses.

To better understand how a hacking mentality can help us root out gaps in our governance systems and move to create a more equitable world, the Ash Center sat down with Schneier to learn more.

Ash: Since what qualifies as a “hack” is subjective, how do you define hacking in the book?

Schneier: A hack is something that a system permits but is unintended and unanticipated by its designers. I mean this both precisely and generally. A hack is an exploitation. It’s clever. Hacks subvert the rules of a system to the benefit of the hacker.

Traditionally, the word applies to computer systems, but it’s generalizable. What I try to do in my book is take the term out of those narrow confines and into the broader world.

As you write in the book, people have been hacking society’s systems throughout history. Can you give us some examples?

Some of the most fun I had in writing the book was finding the examples. Tax loopholes are an obvious one. They’re not illegal, since the “code” allows them, but they are unintended and unanticipated exploitations of the tax code. And wherever there are sets of rules, and people who want an advantage, there are hacks. Curving your hockey stick was originally a hack; so was dunking in basketball. The filibuster is a hack, invented by Cato the Younger in 60 BCE. The Catholic Church’s sale of indulgences was a hack; so were all sorts of tricks to get around religious laws against usury. Mileage runs (ways to get lots of frequent flier miles at low cost) are a hack.

Remember, hacks are not against the rules. They are permitted, or at least the rules are silent about them.

You call out democracy as one of the areas where unchecked hacking can weaken systems. Can you give an example of a hack that undermines democracy?

There are many hacks that subvert the democratic process in the US. Gerrymandering is one example: it’s not necessarily against the rules, but it subverts the goals of the system. Legislators in many countries use delaying tactics that are hacks. In Japan, one is known as “ox walking”: walking to vote so slowly that the vote never actually happens. Most voter suppression tactics are hacks, too: not specifically against the rules, but subverting the goals of an election. So is deliberately running a third-party candidate whose name is similar to an opponent’s in order to confuse voters, something that happens a lot outside the US.

Are there any examples of hacks that you think have been good for democracy?

Our system of laws has been repeatedly hacked to give people more rights and more freedoms. This isn’t new. In 1763, the English law of trespass, which traditionally applied only to individuals who trespassed on each other’s lands, was expanded to apply to the government as well: government agents, too, could not trespass onto individuals’ lands. That was a hack. It was an unintended and unanticipated effect of the law as originally conceived, but it became part of the law going forward.

Access to information is core to the functioning of a healthy democracy. You write about how cognitive hacking, used by advertisers and social platforms, can capture our attention and determine how we access or understand information. How can we counteract these types of vulnerabilities — things that are core to human behavior?

It’s hard, and in some cases impossible. When a new hack is discovered, whoever is in charge of the system has two options: they can patch the vulnerability and make the hack impossible or disallowed, or they can incorporate it into the system. Some tax loopholes are patched; some are not. In 1975, someone showed up on a Formula One racetrack with a six-wheeled car. It turned out that the Formula One rules were silent on the number of wheels a car could have, but they were quickly patched to close that loophole. Other hacks become part of the system, like gerrymandering (to one degree or another), the filibuster, and dunking in basketball. Cognitive hacks, which are tricks used against our own brains, are really hard to patch. Evolution works slowly, and we aren’t technologically advanced enough to modify our own brains. The best we can do is, first, be aware of the tactics and, second, declare some of them illegal. That can be done today: for example, the Federal Trade Commission has the power to ban unfair and deceptive trade practices.

With the advent of artificial intelligence (AI), hacking by AI might not be preventable, but you argue that it could be governed. What kinds of governance systems might help prevent AI hacking?

Hacking is fundamentally a creative process, which has so far made it uniquely human. But AI systems can be creative, and it is probable that one day AIs will discover hacks. We can imagine feeding an AI the entire US tax code, or all the financial regulations, and giving it the goal of finding profitable hacks. That would upend many of the legal constraints that limit the damage hacks can do, and it is something I spend a lot of time on in the book.

Governance is hard here, because our systems of closing loopholes are human and were developed to deal with the human pace of hacking. We’re not ready as a society to respond to an AI that finds hundreds, or thousands, of new tax loopholes. What we need to think about is how to design government systems to be as agile as our computer systems. You patch your computers and phones weekly or monthly. Currently it can take years – or longer – to patch a tax loophole.

If readers adopt one practice or change their thinking from the book, what do you hope they’ll take away?

My goal is to give people a new way of thinking about how society’s rules work and how they fail. We in the computer world have developed a series of mitigation strategies to reduce the ill effects of hacking. Those strategies are broadly applicable, and I want people to understand that as well.
